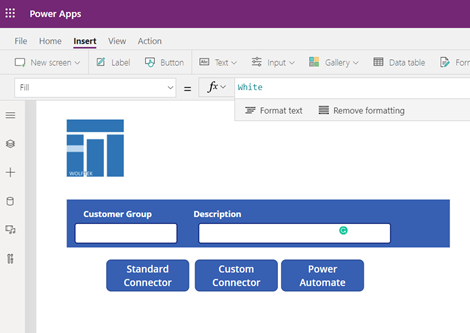

In this short post we want to offer some clarifications and options for managing connections to D365 from Power Platform applications. The same D365 connector is used by all applications in the Power suite. A note for the techies who like the inner workings of things: the connection details, including authentication credentials and tokens, are stored behind the scenes by the Power Platform connectors in APIM (Azure API Management).

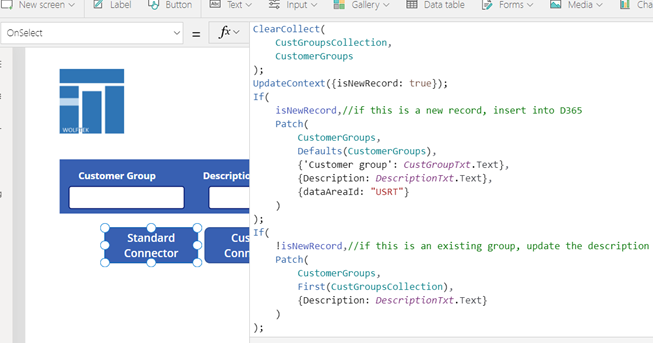

When connecting to D365, the typical approach is to use the standard connector and configure it with a generic service account. In terms of authentication, this means that the Power Platform application authenticates to AAD to obtain an access token with every call to D365. This is easy and convenient: you do not have to worry about refreshing the AAD tokens, which by default are set to expire within 60 minutes.

Alternatively, if you choose to connect to D365 (or, for that matter, to any other API that uses AAD authentication) through OData or RESTful HTTP service calls, you have to decide how to handle access token refreshes. In most cases, again, authenticating with every service call should be sufficient. However, if you need finer control over your flow, perhaps for performance reasons, you may want to cache the access token for reuse. One straightforward way to handle this is to store the access token in a local variable the first time the API is called, and then reuse it on subsequent calls. The assumption here is that the application runs for a reasonably short time, shorter than the lifetime of the token.
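To make the local-variable idea concrete, here is a minimal sketch in Python. It only illustrates the logic and is not Power Platform code. The `CachedToken` class and the `fetch_token` callable are hypothetical names: `fetch_token` stands in for whatever request your flow makes to AAD, and it is assumed to return the token together with its lifetime in seconds. The sketch also adds a small expiry check as a guard, in case the run lasts longer than expected.

```python
import time
from typing import Callable, Optional, Tuple

# Hypothetical signature: returns (access_token, lifetime_in_seconds).
FetchToken = Callable[[], Tuple[str, float]]


class CachedToken:
    """Keeps one access token in memory and reuses it until it is close to expiry."""

    def __init__(
        self,
        fetch_token: FetchToken,
        clock: Callable[[], float] = time.monotonic,
        safety_margin: float = 300.0,  # assumed margin: refresh 5 minutes early
    ) -> None:
        self._fetch_token = fetch_token
        self._clock = clock
        self._safety_margin = safety_margin
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        # Authenticate on the first call, or when the cached token is about to expire.
        if self._token is None or now >= self._expires_at - self._safety_margin:
            token, lifetime = self._fetch_token()
            self._token = token
            self._expires_at = now + lifetime
        return self._token
```

Each service call would then ask for `cache.get()` instead of authenticating again, so AAD is contacted only once per run unless the token is about to expire.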

Another, more intricate approach, which solves the problem of reusing the access token across multiple executions of the same application, is to store the access token externally, for example in Azure Key Vault. This approach also requires that you implement logic to refresh the token when it expires. For inspiration see the following blog post.
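The store-and-refresh logic itself can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a definitive implementation: `get_shared_token`, `store`, `fetch_token` and the secret name `d365-access-token` are all hypothetical. The `store` is any dictionary-like object that stands in for the external secret store, and `fetch_token` stands in for the AAD request, assumed to return the token and its lifetime in seconds.

```python
import json
import time
from typing import Callable, MutableMapping, Tuple


def get_shared_token(
    store: MutableMapping[str, str],               # stand-in for the external secret store
    fetch_token: Callable[[], Tuple[str, float]],  # hypothetical: returns (token, lifetime_seconds)
    secret_name: str = "d365-access-token",        # hypothetical secret name
    now: Callable[[], float] = time.time,          # wall clock, so separate runs agree on expiry
    safety_margin: float = 300.0,                  # assumed margin: refresh 5 minutes early
) -> str:
    """Return a token from the store, refreshing and saving it when it is missing or stale."""
    raw = store.get(secret_name)
    if raw is not None:
        entry = json.loads(raw)
        if now() < entry["expires_at"] - safety_margin:
            return entry["token"]

    # Missing or about to expire: authenticate again and persist the new token.
    token, lifetime = fetch_token()
    store[secret_name] = json.dumps({"token": token, "expires_at": now() + lifetime})
    return token
```

Storing the expiry time next to the token is the design choice that matters here: a later execution can decide whether to reuse or refresh without calling AAD first. With a real vault, the dictionary reads and writes would be replaced by the vault's secret read and write operations.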